ApiTestEngine QuickStart

Introduction to Sample Interface Service

Along with this project, I have built a sample interface service that you can use to familiarize yourself with ApiTestEngine.

This sample service mainly has two parts:

- Authorization: before calling any other API, a client must sign its request with certain header fields and obtain a token.

- RESTful APIs for user management: you can perform CRUD operations on users.

As you can see, it closely resembles mainstream production systems. Once you are comfortable with this demo service, you should be able to handle most test scenarios in your own project.

Launch Sample Interface Service

The demo service is a Flask server, so it can be launched like any other Flask application.

Now the sample interface service is running, and we can move on to the next step.

Capture HTTP request and response

Before we write test cases, we should know the details of the API. A web debugging proxy such as Charles Proxy is a good way to capture the HTTP traffic.

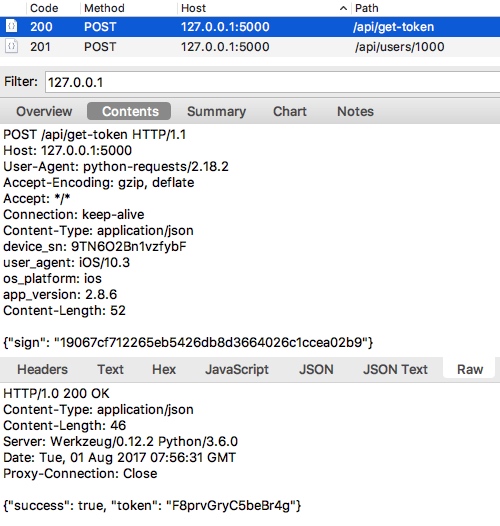

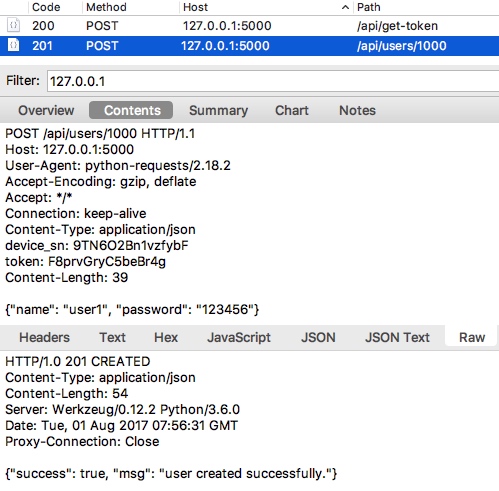

For example, the screenshots above show a token being obtained from the sample service and then a user being created successfully.

With a thorough understanding of the APIs, we can now begin to write test cases.

Write the first test case

Open your favorite text editor and you can write test cases like this.

As you can see, each API request is described in a test block. The request field holds the details of the HTTP request, including url, method, headers and data, which match the captured traffic.
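As a rough sketch of the structure just described, the same nesting can be written as Python data. Everything concrete here is an assumption for illustration: the `name` key, the URL paths, the port (Flask's default, 5000), and all header, sign and token values are placeholders rather than captured traffic.

```python
import json

# Two test blocks mirroring the shape described above.
# URLs, header values and the sign/token strings are hypothetical placeholders.
testcases = [
    {
        "test": {
            "name": "get token",
            "request": {
                "url": "http://127.0.0.1:5000/api/get-token",
                "method": "POST",
                "headers": {"device_sn": "PLACEHOLDER_SN"},
                "json": {"sign": "PLACEHOLDER_SIGN"},
            },
        }
    },
    {
        "test": {
            "name": "create user",
            "request": {
                "url": "http://127.0.0.1:5000/api/users/1000",
                "method": "POST",
                "headers": {
                    "device_sn": "PLACEHOLDER_SN",
                    "token": "PLACEHOLDER_TOKEN",  # hardcoded, as recorded
                },
                "json": {"name": "user1", "password": "123456"},
            },
        }
    },
]

# Every request block uses only the fields the text names.
allowed = {"url", "method", "headers", "data", "json"}
for case in testcases:
    assert set(case["test"]["request"]) <= allowed

print(json.dumps(testcases, indent=2))
```

Written as YAML, the nesting would be the same: a list of test blocks, each with a request mapping.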

You may wonder why we use the json field rather than data. The post data is in JSON format, and when we pass it through json we do not have to set Content-Type to application/json in the request headers or serialize the data before sending the request.
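To make the saving concrete, here is a small standard-library sketch of the two manual steps that the json field makes unnecessary: serializing the payload and setting the Content-Type header. The helper name `build_json_post`, the URL and the payload are assumptions for illustration; the request is only built, never sent.

```python
import json
import urllib.request


def build_json_post(url, payload, headers=None):
    """Build (but do not send) a POST request that carries a JSON body."""
    # Step 1: dump the data to a JSON byte string by hand.
    body = json.dumps(payload).encode("utf-8")
    # Step 2: declare the body format explicitly.
    all_headers = {"Content-Type": "application/json"}
    all_headers.update(headers or {})
    return urllib.request.Request(url, data=body, headers=all_headers, method="POST")


# Hypothetical endpoint and payload, for illustration only.
req = build_json_post(
    "http://127.0.0.1:5000/api/users/1000",
    {"name": "user1", "password": "123456"},
)
print(req.get_method(), req.full_url)
print(req.data)
```

With the json field, both steps are left to the underlying HTTP library.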

Does this look familiar?

Yes! That’s exactly what we do with requests.request! Since ApiTestEngine fully reuses Requests, it inherits all of its powerful features, and we can handle HTTP requests the same way we always have.

Run test cases

Suppose the test case file is named quickstart-demo-rev-0.yml and is located in the examples folder. We can then run it with ApiTestEngine.

Oops! The second test case failed with 403 status code.

That is because we sent the same data that we captured in Charles Proxy, whereas the token is generated dynamically, so the recorded data cannot be reused directly.

Optimize test case: correlation

To fix this problem, we should take the token returned by the first request and pass it to the second API test case. This technique is called correlation.

As you can see, the token field is no longer hardcoded. Instead, it is extracted from the response of the first API request by the extractors mechanism and bound to the token variable, which subsequent API requests can reference.
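The idea behind correlation can be sketched in a few lines of Python. This is not ApiTestEngine's implementation; the helper names `extract` and `substitute`, the dotted-path convention, and the response body are all assumptions made for illustration.

```python
def extract(response_json, path):
    """Follow a dotted path such as 'token' or 'data.token' into a decoded JSON body."""
    value = response_json
    for key in path.split("."):
        value = value[key]
    return value


def substitute(obj, variables):
    """Recursively replace strings of the form '$name' with the bound value."""
    if isinstance(obj, dict):
        return {key: substitute(val, variables) for key, val in obj.items()}
    if isinstance(obj, list):
        return [substitute(item, variables) for item in obj]
    if isinstance(obj, str) and obj.startswith("$") and obj[1:] in variables:
        return variables[obj[1:]]
    return obj


# Stand-in for the decoded body of the first (get token) response.
first_response = {"success": True, "token": "abc123"}

# Bind the extracted value to a variable named token.
variables = {"token": extract(first_response, "token")}

# The second request references the variable instead of a recorded value.
second_request = {
    "url": "http://127.0.0.1:5000/api/users/1000",  # hypothetical URL
    "headers": {"device_sn": "PLACEHOLDER_SN", "token": "$token"},
}
resolved = substitute(second_request, variables)
print(resolved["headers"])
```

Because the token is read from the live response on every run, the second request no longer depends on a value recorded in an earlier session.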

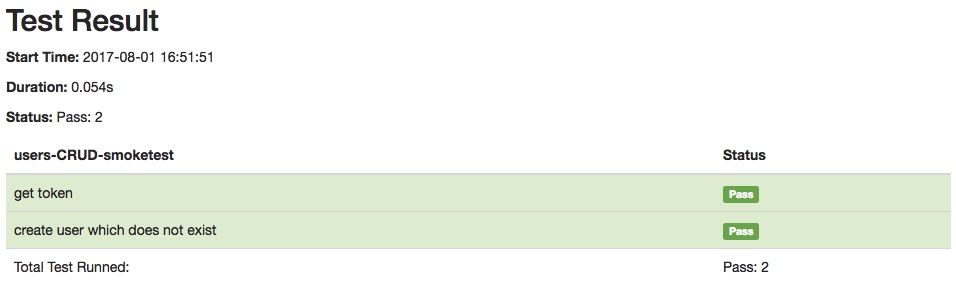

Now we save the test cases to quickstart-demo-rev-1.yml and rerun them, and we will find that both API requests succeed.

Optimize test case: parameterization

Let’s look back at our test set quickstart-demo-rev-1.yml: the device_sn field is still hardcoded, which is quite different from real-world usage.

In real scenarios, each user’s device_sn is different, so we should generate such request fields dynamically rather than hardcode them. This is called parameterization. In addition, the sign field is calculated from other header fields, so it changes completely if any of those fields changes even slightly.

However, the test cases are only YAML documents, and it is impossible to generate parameters dynamically in plain text. Fortunately, ApiTestEngine lets us combine Python scripts with YAML test cases.

To achieve this, we can use the import_module_functions and variables mechanisms.

Specifically, we can create a Python file (examples/utils.py) and implement the related algorithms in it. Since we want to import this file, we should also put an __init__.py in the same folder to make it a Python package.
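A minimal sketch of what such a helper module might contain is shown here. The function names `gen_random_string` and `get_sign`, the `SECRET_KEY` value, and the choice of HMAC-SHA1 over the concatenated fields are assumptions for illustration; the real signing scheme is whatever the service under test expects.

```python
import hashlib
import hmac
import random
import string

# Hypothetical shared secret; the real value must match the server's.
SECRET_KEY = "demo-secret"


def gen_random_string(length):
    """Return a random alphanumeric string, e.g. for a per-run device_sn."""
    alphabet = string.ascii_letters + string.digits
    return "".join(random.choice(alphabet) for _ in range(length))


def get_sign(*fields):
    """Sign the concatenated header fields with HMAC-SHA1 (assumed scheme)."""
    content = "".join(fields).encode("utf-8")
    key = SECRET_KEY.encode("utf-8")
    return hmac.new(key, content, hashlib.sha1).hexdigest()


device_sn = gen_random_string(15)
sign = get_sign("iOS/10.3", device_sn, "ios", "2.8.6")  # placeholder header values
print(device_sn, sign)

# A one-character change in any field yields an unrelated signature.
print(get_sign("iOS/10.4", device_sn, "ios", "2.8.6"))
```

This is why a recorded sign value cannot simply be reused once device_sn is randomized: the signature has to be recomputed from the same fields that are sent.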

We can then revise our demo test case to reference these functions. Suppose the revised file is named quickstart-demo-rev-2.yml.

In this revised test case, we first import the module's functions in the import_module_functions block by specifying the Python module path, which is relative to the current working directory.

So that fields like device_sn can be used more than once, we also bind values to variables in the variables block. A variable can be bound either to a literal value or to the result of a function call.

To reference a variable in the test case, we prefix its name with $. For example, $user_agent is not treated as a plain string; ApiTestEngine treats it as a variable named user_agent and substitutes its bound value.

To call a function, we use the ${} syntax. Anything inside ${} is treated as a function call, so we must make sure the call is well formed. Variables can also be passed as function arguments.
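The two reference forms can be illustrated with a toy resolver. This is only a sketch of the idea, not ApiTestEngine's parser: the regular expressions, the `resolve` and `parse_arg` helpers, and the sample functions are all hypothetical, and only whole-string references with simple comma-separated arguments are handled.

```python
import re

FUNC_CALL = re.compile(r"^\$\{(\w+)\((.*)\)\}$")  # e.g. ${join($a, $b)}
VARIABLE = re.compile(r"^\$(\w+)$")               # e.g. $user_agent


def parse_arg(text, variables):
    """Turn one raw argument into a variable value, an int, or a plain string."""
    text = text.strip()
    match = VARIABLE.match(text)
    if match:
        return variables[match.group(1)]
    try:
        return int(text)
    except ValueError:
        return text


def resolve(value, variables, functions):
    """Resolve a '$var' or '${func(args)}' reference; return anything else unchanged."""
    if not isinstance(value, str):
        return value
    match = FUNC_CALL.match(value)
    if match:
        name, raw_args = match.groups()
        args = [parse_arg(arg, variables) for arg in raw_args.split(",") if arg.strip()]
        return functions[name](*args)
    match = VARIABLE.match(value)
    if match:
        return variables[match.group(1)]
    return value


def join(*parts):
    return "-".join(parts)


def repeat(text, count):
    return text * count


functions = {"join": join, "repeat": repeat}
variables = {"user_agent": "iOS/10.3", "os_platform": "ios"}  # placeholder values

print(resolve("$user_agent", variables, functions))
print(resolve("${join($user_agent, $os_platform)}", variables, functions))
print(resolve("${repeat(ab, 3)}", variables, functions))
print(resolve("plain text", variables, functions))
```

Note how a variable inside ${} is looked up before the function is called, which is what allows a signing function to receive the same device_sn that is sent in the headers.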

Optimize test case: overall config block

There is still one unsolved issue.

The device_sn field is defined in the first API test case, so other test cases may not be able to reference it. This context separation between test cases is deliberate and good practice, so we should respect it rather than work around it.

To handle this case, ApiTestEngine supports an overall config block. Variables defined and functions imported in the config block become global and can be referenced throughout the whole test set.

As you can see, we import the shared Python module and define the shared variables in the config block. We can also set base_url there, so that each API request only needs to specify a relative path in its url. In addition, common request fields, such as device_sn in headers, can be set once in the config request.

At this point, the test cases are complete and every detail is handled properly.
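The effect of a config-level request can be sketched as a simple merge. The function `apply_config` and the merge rules (test-level headers override config-level ones, and base_url is prepended only to relative paths) are assumptions for illustration, not a description of ApiTestEngine's internals.

```python
def apply_config(config_request, test_request):
    """Combine the shared config request with one test's request."""
    merged = dict(test_request)

    # Shared headers first, then test-specific headers on top.
    headers = dict(config_request.get("headers", {}))
    headers.update(test_request.get("headers", {}))
    merged["headers"] = headers

    # Prepend base_url only when the test gives a relative path.
    base_url = config_request.get("base_url", "")
    url = test_request["url"]
    if base_url and not url.startswith(("http://", "https://")):
        merged["url"] = base_url.rstrip("/") + "/" + url.lstrip("/")
    return merged


# Hypothetical values for illustration.
config_request = {
    "base_url": "http://127.0.0.1:5000",
    "headers": {"device_sn": "PLACEHOLDER_SN"},
}
test_request = {
    "url": "/api/users/1000",
    "method": "POST",
    "headers": {"token": "abc123"},
}

full_request = apply_config(config_request, test_request)
print(full_request["url"])
print(full_request["headers"])
```

Each test block then only states what is specific to it, while the shared parts live in one place.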

Run test cases and generate report

Finally, let’s run test set quickstart-demo-rev-4.yml once more.

Great! The test cases run successfully and an HTML test report is generated.

Further reading

This is just a starting point. See the advanced guide for more advanced features:

- templating

- data extraction and validation

- comparator